Adaptive bitrate streaming, while commonplace and in high demand across apps and websites, introduces a layer of complexity that developers must navigate. In this evolving landscape of streaming services, the majority of media players have adopted the strategy of loading data in chunks. However, this method raises questions: Does it truly address issues such as low bandwidth? Is it efficient when a high-quality video continues buffering due to poor connectivity? And what happens when there’s an uptick in bandwidth – does the system continue loading a low-quality video, failing to adjust to the improved conditions? The true challenge lies in answering these queries and refining the adaptive bitrate streaming process.

The intricacies of adaptive bitrate streaming and its issues are concerns typically addressed at the production level, where quality and user experience are paramount. For those willing to delve into these complexities, I’m about to provide an in-depth exploration of video transcoding, encoding, and buffered streaming. And to ensure this understanding is practical and actionable, I’ll accompany it with a straightforward NodeJS implementation. After all, true learning stems from active experience and practice. So, let’s delve into the realm of optimized video streaming, where theory meets application.

Just a heads up – due to the technical nature of this topic, this article will be quite extensive. I’ve aimed to make it as descriptive and comprehensive as possible. To maintain conciseness, I’ll occasionally provide reference links for further exploration. Remember, I highly encourage questions and discussions, so feel free to leave any queries in the comment section. I’m here to help make this complex journey through video streaming technology as clear and informative as possible.

In due course, I will guide you through the process of creating a standalone server in NodeJS. This server will manage the tasks of transcoding, encoding, and generating video chunks at varying bitrates. Moreover, it will generate an extended M3U playlist. This hands-on approach will allow you to directly engage with these technologies, providing invaluable insight and experience.

The repository link is at the end of the blog 😉

What is HLS Adaptive bitrate streaming anyways?

HLS stands for HTTP Live Streaming. Why did we need the HLS protocol? Apple realized, well before releasing HLS in 2009, that conventional ways of streaming were not optimal and were far too slow. We needed a way to switch the quality of a streaming video automatically, based on the bandwidth of the end user. This kind of automatic quality switching is now implemented by almost all video streaming providers, such as Netflix, YouTube, Instagram, and Facebook, as well as live conferencing providers like Skype and Zoom.

Update: Netflix and YouTube have moved to DASH. Read here.

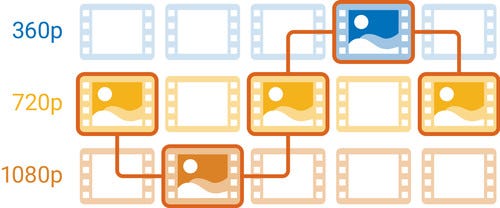

HLS works by breaking each stream into a sequence of small HTTP-based file downloads, each chunk carrying a short part of the overall video rather than the whole file. The video is encoded at several bitrates, and the list of available streams is sent to the receiver via an extended M3U playlist.
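To make the switching idea concrete, here is a minimal sketch of how a client could choose a stream from the measured bandwidth. It is not how any particular player implements it: the `variants` list, the `pickVariant` name, and the 0.8 safety factor are assumptions made for illustration.

```javascript
// Hypothetical rendition ladder: bandwidth is the bits per second each stream needs.
const variants = [
  { name: '360p', bandwidth: 800000 },
  { name: '480p', bandwidth: 1400000 },
  { name: '720p', bandwidth: 2800000 },
  { name: '1080p', bandwidth: 5000000 },
];

// Pick the highest rendition that fits within a safety margin of the
// measured throughput; fall back to the lowest rendition otherwise.
function pickVariant(list, measuredBps, safety = 0.8) {
  const sorted = [...list].sort((a, b) => a.bandwidth - b.bandwidth);
  const budget = measuredBps * safety;
  let chosen = sorted[0];
  for (const v of sorted) {
    if (v.bandwidth <= budget) chosen = v;
  }
  return chosen;
}

console.log(pickVariant(variants, 4000000).name); // 720p
```

A client would repeat this decision as it downloads chunks, which is why quality can move both up and down during playback.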

I am not going to dive into the architecture and design of the protocol. I will drop a few links in the references section and you can take a read.

FFmpeg

We will use FFmpeg command-line tools. FFmpeg is a cross-platform tool to record, convert and stream audio and video. Visit this page to download the binary for Linux, Windows, or OS X distro. I am using OS X.

Here is the shell script that you can use to create VOD HLS for a simple video. After downloading this script, you will be able to use it as follows:

$ bash create-vod-hls <input-video-file> <output-folder>

And this will generate the following output renditions:

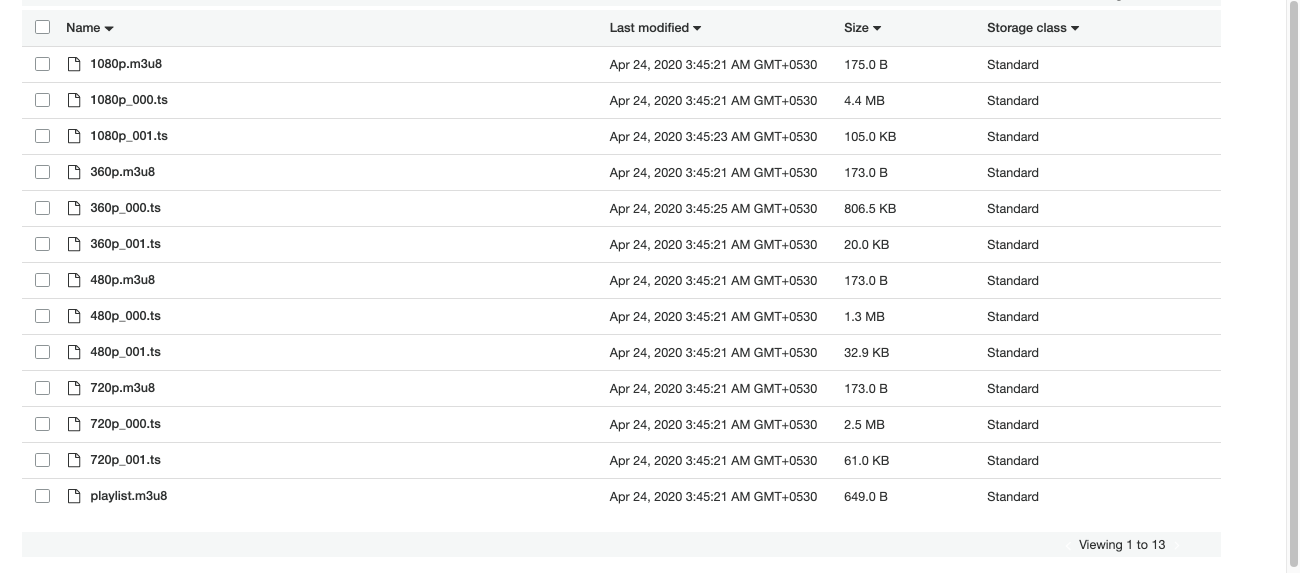

The generated playlist.m3u8 is an extended M3U playlist file and is directly streamable over HTTPS.

Diving deep into the script

Before starting the conversion, let’s find out a little more about our source file. To inspect the properties of the source file, run the following command:

$ ffprobe -hide_banner <source_video_file>

And this will give you the following output for a clip shot on iPhone OSX:

Input #0, mov,mp4,m4a,3gp,3g2,mj2, from 'VID_20190725_220619.mov':
  Metadata:
    major_brand     : qt
    minor_version   : 0
    compatible_brands: qt
    creation_time   : 2020-04-23T18:27:04.000000Z
    location        : +30.7457+076.7352/
    encoder         : Google
    com.apple.quicktime.location.ISO6709: +30.7457+076.7352/
  Duration: 00:00:06.04, start: 0.000000, bitrate: 6547 kb/s
    Stream #0:0(und): Video: h264 (High) (avc1 / 0x31637661), yuv420p(tv, smpte170m, progressive), 1080x1920 [SAR 1:1 DAR 9:16], 6416 kb/s, 59.19 fps, 59.19 tbr, 10536 tbn, 118.38 tbc (default)
    Metadata:
      creation_time   : 2020-04-23T18:27:04.000000Z
      handler_name    : Core Media Video
      encoder         : 'avc1'
    Stream #0:1(eng): Audio: aac (LC) (mp4a / 0x6134706D), 44100 Hz, stereo, fltp, 128 kb/s (default)
    Metadata:
      creation_time   : 2020-04-23T18:27:04.000000Z
      handler_name    : Core Media Audio

The file is identified as a QuickTime video file, 6 seconds long, with an overall bitrate of 6547 kb/s. It contains one H.264 video stream at 1080×1920 and 59.19 fps, and one AAC audio stream at 44.1 kHz and 128 kb/s.

Now we have to handle the multi-bitrate conversion of this video.
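If you want to read the same properties from NodeJS, ffprobe can print its report as JSON. The sketch below assumes ffprobe is on your PATH; `summarizeProbe` and `probe` are hypothetical helper names, not part of the repository.

```javascript
const { execFile } = require('child_process');

// Reduce ffprobe's JSON report to the few fields useful for validation.
function summarizeProbe(report) {
  const streams = report.streams || [];
  const video = streams.find((s) => s.codec_type === 'video');
  const audio = streams.find((s) => s.codec_type === 'audio');
  return {
    duration: Number(report.format && report.format.duration),
    videoCodec: video ? video.codec_name : null,
    width: video ? video.width : null,
    height: video ? video.height : null,
    audioCodec: audio ? audio.codec_name : null,
  };
}

// Run ffprobe and resolve with the summary, or reject if it fails.
function probe(file) {
  const args = ['-v', 'error', '-print_format', 'json',
    '-show_format', '-show_streams', file];
  return new Promise((resolve, reject) => {
    execFile('ffprobe', args, (err, stdout) => {
      if (err) return reject(err);
      try {
        resolve(summarizeProbe(JSON.parse(stdout)));
      } catch (parseErr) {
        reject(parseErr);
      }
    });
  });
}
```

A file with no video stream comes back with `videoCodec: null`, which is an easy condition to reject during upload.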

The command to build a rendition is as follows:

$ ffmpeg -i <file_name> -vf scale=w=720:h=1280 -c:a aac -ar 48000 -b:a 128k -c:v h264 -profile:v main -crf 20 -g 48 -keyint_min 48 -sc_threshold 0 -b:v 2500k -maxrate 2675k -bufsize 3750k -hls_time 4 -hls_playlist_type vod -hls_segment_filename video/720p_%03d.ts video/720p.m3u8

-i : specifies the input video file.

-vf : applies the scale filter, which resizes the video to the given width and height. Optionally, you can ask the filter to preserve the aspect ratio. That fails for portrait videos, hence it is omitted from the command above. If you need to keep the aspect ratio, use -vf scale=w=720:h=1280:force_original_aspect_ratio=decrease

-c:a, -ar, -b:a : set the audio codec, sample rate, and bitrate.

-c:v : sets the video codec to H264.

-profile:v : setting this to main will add support for modern devices.

-crf : Constant Rate Factor. With x264 and x265, you can set values between 0 and 51, where lower values result in better quality at the expense of larger files.

-g, -keyint_min : set the maximum and minimum length of a GOP (Group of Pictures). The minimum matters because the encoder might otherwise decide to add a keyframe before the -g interval is reached, and -sc_threshold 0 stops it from adding keyframes on scene changes. Together they create a keyframe (I-frame) every 48 frames (about 2 seconds at 24 fps), which later affects correct slicing of segments and alignment of renditions. Find more details.

-b:v, -maxrate, -bufsize : limit the video bitrate. These values are rendition specific and depend on the content type.

-hls_time : Segment duration in seconds.

-hls_playlist_type : adds the #EXT-X-PLAYLIST-TYPE:VOD tag and keeps all segments in the playlist.

-hls_segment_filename : Define segment filename.

video/720p.m3u8 : path of the output HLS playlist file (.m3u8).
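The relationship between -g, the frame rate, and -hls_time is plain arithmetic, sketched below. The helper names are hypothetical. Note that 48 frames is 2 seconds only at 24 fps; for the 59.19 fps clip inspected earlier, 48 frames is roughly 0.8 seconds.

```javascript
// Frames per GOP for a target keyframe interval in seconds.
function gopSize(fps, keyframeSeconds) {
  return Math.round(fps * keyframeSeconds);
}

// True when the segment duration is a whole multiple of the keyframe
// interval, so every segment boundary can land on a keyframe.
function segmentsAlign(fps, gop, hlsTime) {
  const gopsPerSegment = (hlsTime * fps) / gop;
  return Number.isInteger(gopsPerSegment);
}

console.log(gopSize(24, 2));           // 48
console.log(segmentsAlign(24, 48, 4)); // true
```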

This will generate a VOD HLS playlist and segments in the video folder. The script uses the same command to generate the other renditions at different resolutions. Additionally, the modified script adds the S3 URLs to the m3u8 playlist so that the resources can be referenced from any device, and it triggers a webhook to alert the server that the renditions are finished for a specific video. Check the modified script here.

The sample content of the final playlist.m3u8 file is as follows:
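If you would rather assemble the ffmpeg arguments in NodeJS than in bash, a sketch could look like the following. The `renditions` ladder and function names are hypothetical. The 1.07 and 1.5 multipliers are inferred from the single command above (2675k and 3750k for a 2500k target) and are only assumed to carry over to other renditions.

```javascript
const path = require('path');

// Hypothetical ladder; sizes and bitrates (kb/s) are illustrative only.
const renditions = [
  { name: '360p', width: 640, height: 360, videoKbps: 800, audioKbps: 96 },
  { name: '720p', width: 1280, height: 720, videoKbps: 2500, audioKbps: 128 },
];

// Output options for one rendition, mirroring the command shown above.
function buildRenditionArgs(r, outDir) {
  const maxrate = Math.round(r.videoKbps * 1.07);
  const bufsize = Math.round(r.videoKbps * 1.5);
  return [
    '-vf', `scale=w=${r.width}:h=${r.height}`,
    '-c:a', 'aac', '-ar', '48000', '-b:a', `${r.audioKbps}k`,
    '-c:v', 'h264', '-profile:v', 'main', '-crf', '20',
    '-g', '48', '-keyint_min', '48', '-sc_threshold', '0',
    '-b:v', `${r.videoKbps}k`, '-maxrate', `${maxrate}k`, '-bufsize', `${bufsize}k`,
    '-hls_time', '4', '-hls_playlist_type', 'vod',
    '-hls_segment_filename', path.join(outDir, `${r.name}_%03d.ts`),
    path.join(outDir, `${r.name}.m3u8`),
  ];
}

// One ffmpeg invocation with one output per rendition.
function buildFfmpegArgs(input, outDir, ladder) {
  return ['-hide_banner', '-y', '-i', input,
    ...ladder.flatMap((r) => buildRenditionArgs(r, outDir))];
}

console.log(buildFfmpegArgs('input.mov', 'video', renditions).join(' '));
```

The resulting array can be passed to `spawn('ffmpeg', args)` without any shell quoting concerns.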

#EXTM3U

#EXT-X-VERSION:3

#EXT-X-STREAM-INF:BANDWIDTH=800000,RESOLUTION=640x360

<S3_URL>/683d3821-12cf-4f6d-9266-2f8f51857886/360p.m3u8

#EXT-X-STREAM-INF:BANDWIDTH=1400000,RESOLUTION=842x480

<S3_URL>/683d3821-12cf-4f6d-9266-2f8f51857886/480p.m3u8

#EXT-X-STREAM-INF:BANDWIDTH=2800000,RESOLUTION=1280x720

<S3_URL>/683d3821-12cf-4f6d-9266-2f8f51857886/720p.m3u8

#EXT-X-STREAM-INF:BANDWIDTH=5000000,RESOLUTION=1920x1080

<S3_URL>/683d3821-12cf-4f6d-9266-2f8f51857886/1080p.m3u8

After uploading to S3, replace the S3_URL with your AWS S3 Endpoint or any other CDN you wish to use.
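Since the master playlist is just text, generating it with the right base URL is straightforward. Here is a minimal sketch; `buildMasterPlaylist` and the shape of the `ladder` entries are assumptions for illustration, and the example CDN URL is a placeholder.

```javascript
// Build the master playlist text for a set of renditions.
function buildMasterPlaylist(baseUrl, videoId, ladder) {
  const base = baseUrl.replace(/\/+$/, ''); // drop trailing slashes
  const lines = ['#EXTM3U', '#EXT-X-VERSION:3'];
  for (const r of ladder) {
    lines.push(`#EXT-X-STREAM-INF:BANDWIDTH=${r.bandwidth},RESOLUTION=${r.resolution}`);
    lines.push(`${base}/${videoId}/${r.name}.m3u8`);
  }
  return lines.join('\n') + '\n';
}

const playlist = buildMasterPlaylist(
  'https://cdn.example.com/',
  '683d3821-12cf-4f6d-9266-2f8f51857886',
  [
    { name: '360p', bandwidth: 800000, resolution: '640x360' },
    { name: '720p', bandwidth: 2800000, resolution: '1280x720' },
  ]
);
console.log(playlist);
```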

Server

Clearly, such a process cannot be carried out manually; an automated workflow is essential. We need a system that can upload videos, generate renditions and HLS VOD playlists, upload files to S3, and prepare the videos for streaming. To facilitate this, I’ve created a sample repository that manages this entire flow. For those who prefer a direct approach and wish to dive straight into the code, you’ll find the link to the repository at the end of this blog.

There are 2 APIs exposed:

API to upload the video.

This API reads the video from the multipart/form-data request and saves it temporarily on the server. It runs various checks, such as valid video formats and valid video files, using ffprobe, which is available as an npm package.

After that, the video is saved in the uploads directory on the server root folder:

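const fs = require('fs');
const path = require('path');

// Create uploads/<videoId>, including the uploads folder itself if it is missing
fs.mkdirSync(path.resolve('uploads', videoId), { recursive: true });

const videoFilePath = path.resolve('uploads', videoId, `${videoId}.${extension}`);

fs.writeFileSync(videoFilePath, data);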
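Because the extension ends up in a file path, it is worth validating before use. The following is a sketch of one possible format check, not the check the repository performs: the allowlist and the `safeExtension` name are assumptions.

```javascript
const path = require('path');

// Assumed allowlist; adjust it to the formats your pipeline accepts.
const ALLOWED_EXTENSIONS = new Set(['mp4', 'mov', 'mkv', 'webm']);

// Return the lowercase extension without the dot, or null if not allowed.
// basename() discards any directory part of a client-supplied name.
function safeExtension(originalName) {
  const ext = path.extname(path.basename(originalName)).slice(1).toLowerCase();
  return ALLOWED_EXTENSIONS.has(ext) ? ext : null;
}

console.log(safeExtension('clip.MOV')); // mov
```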

Then we run the create-vod-hls bash script to build the renditions and generate the HLS VOD playlist for the uploaded video. You can check the script here. The script is started via spawn, and all the logs can be tracked via stdout.

const { spawn } = require('child_process');

const createHLSVOD = spawn('bash', ['create-hls-vod.sh', videoId, extension, S3_URL]);
createHLSVOD.stdout.on('data', d => console.log(`stdout: ${d}`));
createHLSVOD.stderr.on('data', d => console.log(`stderr: ${d}`));
createHLSVOD.on('error', d => console.log(`error: ${d}`));
createHLSVOD.on('close', code => console.log(`child process ended with code ${code}`));

For production use, we would need to handle errors and the different exit codes of the script. After a little research, I found that the shell script and the backend can communicate via either webhooks or sockets. When only a small amount of data is involved, such as rendition status or progress, we can use Netcat to establish a TCP/UDP connection with the server and share the status.
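One simple way to act on exit codes is to wrap spawn in a Promise. This is a sketch under the assumption that the script signals failure with a non-zero exit code; `runScript` is a hypothetical helper name.

```javascript
const { spawn } = require('child_process');

// Run a command and settle a Promise based on how it exits.
function runScript(command, args) {
  return new Promise((resolve, reject) => {
    const child = spawn(command, args);
    let stderr = '';
    child.stdout.on('data', (d) => process.stdout.write(d));
    child.stderr.on('data', (d) => { stderr += d; });
    child.on('error', reject); // e.g. the command could not be started
    child.on('close', (code) => {
      if (code === 0) resolve();
      else reject(new Error(`${command} exited with code ${code}: ${stderr.trim()}`));
    });
  });
}
```

The caller can then `await runScript('bash', [...])` inside a try/catch and mark the video as failed when the Promise rejects.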

After completing the renditions and generating HLS VOD playlists, the script will trigger the webhook endpoint to allow the backend to upload the generated playlist to S3.

Webhook to upload HLS VOD playlist on CDN

The second API is a webhook that lets the backend know that a specific video is done processing and is ready to be uploaded to the CDN. In this repository, I am using AWS S3 as the CDN because it’s easy to use and set up. There is another script, curl_transcoding_finished.sh, which accepts the video directory as the first argument and triggers the webhook.

VIDEO_DIRECTORY="$1"
echo "Uploading Video to CDN: $VIDEO_DIRECTORY"
curl http://127.0.0.1:3000/baremetal/$VIDEO_DIRECTORY

After successfully uploading the content to S3, the directory will look like this

Now, sharing the HTTP URL of playlist.m3u8 is enough to start adaptive bitrate streaming of the video. Playback starts buffering from the base quality of 360p and loads further chunks based on the available bandwidth.
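On the receiving side, the webhook could be handled roughly as follows. This sketch uses only the built-in http module and is not the repository’s implementation: it assumes the directory name is a UUID-like video id, and `onFinished` is a hypothetical callback that performs the upload.

```javascript
const http = require('http');

// Extract the video id from /baremetal/<id>; ids are assumed to be UUID-like,
// which also keeps path tricks such as ../ out of the handler.
function videoIdFromUrl(url) {
  const match = /^\/baremetal\/([0-9a-f-]{36})\/?$/i.exec(url || '');
  return match ? match[1] : null;
}

// Create a server that acknowledges the webhook and starts the upload.
function createWebhookServer(onFinished) {
  return http.createServer((req, res) => {
    const videoId = videoIdFromUrl(req.url);
    if (!videoId) {
      res.writeHead(404);
      return res.end('unknown route');
    }
    // Acknowledge immediately; the upload continues in the background.
    res.writeHead(202);
    res.end('accepted');
    Promise.resolve()
      .then(() => onFinished(videoId))
      .catch((err) => console.error(`upload failed for ${videoId}:`, err));
  });
}
```

Calling `createWebhookServer(uploadFn).listen(3000)` would match the port used by the curl command.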

A Note about S3 configuration

You need S3 configurations to be defined in the .env file before running the example. S3 needs to be explicitly configured to enable writing to the S3 folders. Following are the env variables required:

# AWS configurations
AWS_ACCESSID=<YOUR_AWS_IAM_ACCESSID>
AWS_SECRET=<YOUR_AWS_IAM_SECRET>
S3_BUCKET=<BUCKET_NAME>
S3_URL=<S3_BUCKET_URL>

If you are new to S3, you can replace the code with any CDN you wish to use. Also, if you wish to configure S3, you can follow this guide.
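A small startup check can catch a missing variable before the first upload fails. This is a sketch; `missingEnv` is a hypothetical helper, and it assumes the .env file has already been loaded into process.env.

```javascript
// The variable names come from the .env listing above.
const REQUIRED_ENV = ['AWS_ACCESSID', 'AWS_SECRET', 'S3_BUCKET', 'S3_URL'];

// Return the names of required variables that are unset or blank.
function missingEnv(env = process.env) {
  return REQUIRED_ENV.filter((name) => !env[name] || env[name].trim() === '');
}

const missing = missingEnv();
if (missing.length > 0) {
  console.warn(`Missing S3 configuration: ${missing.join(', ')}`);
}
```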
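Whichever storage you choose, the upload step needs to know which files to send and with which content type. The sketch below builds such a list without calling any storage API; `buildUploadPlan` is a hypothetical helper, and the key layout `<videoId>/<file>` is assumed from the playlist URLs shown earlier.

```javascript
const fs = require('fs');
const path = require('path');

// Content types for HLS playlists and MPEG-TS segments.
const CONTENT_TYPES = {
  '.m3u8': 'application/x-mpegURL',
  '.ts': 'video/mp2t',
};

// List the HLS files in a directory with their target key and content type.
function buildUploadPlan(dir, videoId) {
  return fs.readdirSync(dir)
    .filter((name) => CONTENT_TYPES[path.extname(name)])
    .sort()
    .map((name) => ({
      file: path.join(dir, name),
      key: `${videoId}/${name}`,
      contentType: CONTENT_TYPES[path.extname(name)],
    }));
}
```

Each entry can then be handed to the upload call of your storage client of choice.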

Testing

You can test streaming with most popular media players that support video streaming. VLC is a very common, cross-platform choice. Download playlist.m3u8 and open it in VLC media player.

The video will initially begin streaming at a lower quality, but as you continue streaming, the quality will gradually improve. It’s recommended to use at least a 30-second video to observe these quality changes, given our chunk size is set to 4 seconds. This will allow you to experience how adaptive bitrate streaming dynamically adjusts video quality in response to varying network conditions.
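The 30-second recommendation follows from simple arithmetic on the segment length. Here is a rough sketch; `segmentCount` is a hypothetical helper, and real segment boundaries depend on keyframe placement, so treat the result as an estimate.

```javascript
// Approximate number of segments produced for a video of the given length.
function segmentCount(durationSeconds, hlsTime = 4) {
  return Math.ceil(durationSeconds / hlsTime);
}

console.log(segmentCount(30));   // 8
console.log(segmentCount(6.04)); // 2
```

With only two segments, as for the 6-second sample clip, the player has very little room to change quality.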

You can also test the streaming in an HTML page. Copy the following HTML into your page, replace the playlist URL with the URL of your uploaded playlist, and you are good to go.

<link href="https://vjs.zencdn.net/6.6.3/video-js.css" rel="stylesheet">
<script src="https://vjs.zencdn.net/6.6.3/video.js"></script>
<script src="https://cdnjs.cloudflare.com/ajax/libs/videojs-flash/2.2.1/videojs-flash.min.js"></script>
<script src="https://cdnjs.cloudflare.com/ajax/libs/videojs-contrib-hls/5.15.0/videojs-contrib-hls.min.js"></script>
<video class="video-js vjs-default-skin vjs-big-play-centered" data-setup='{"controls": true, "autoplay" : true }'>
  <source src="<link_to_file>/playlist.m3u8" type="application/x-mpegURL">
  Your browser does not support HTML5 video.
</video>

You can inspect the sequence of chunk files requested by the client from the server as each 4-second chunk is loaded. Use Inspect Tools > Network in your browser.

If you need any help or feel stuck somewhere, let me know in the comments section. PRs are also welcome in the repository.

Repository

Here is the link to the GitHub repository.

References

- Repository for this article.

- FFmpeg

- Wikipedia Article about HLS.

- Apple’s Documentation for HTTP Live Streaming (HLS).

- Reference for Live video Transmuxing and Transcoding.

- Development guidelines for Android and iOS.

- Sample m3u8 playlists for testing.

- Serving video at scale.

- netcat cheatsheet.

- Configuring S3.

- Know also about Dynamic Adaptive Streaming over HTTP (DASH, MPEG-DASH).

- For more similar content, Follow me at www.theserverfault.com
